Enhancing State-of-Health Estimation Through Deep Architecture Modification of LSTM Networks

Copyright Ⓒ 2024 KSAE / 219-05

This is an Open-Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License(http://creativecommons.org/licenses/by-nc/3.0) which permits unrestricted non-commercial use, distribution, and reproduction in any medium provided the original work is properly cited.

Abstract

An accurate assessment of the State-of-Health(SoH) of Li-ion batteries is crucial for ensuring their reliability, safety, and longevity. However, traditional SoH estimation methods often struggle to identify the complex degradation patterns that batteries exhibit over their lifespan. To address this issue, we propose an approach based on Long Short-Term Memory(LSTM) networks that modifies the architecture for deeper learning and makes comprehensive use of battery capacity, cycle, and SoH data. Careful data preprocessing derives these quantities from the raw measurements. The model captures the cumulative effects of repeated charge-discharge cycles, supporting accurate predictions over an extended battery lifespan. On the NASA battery aging dataset, the proposed model reduced the RMSE relative to the PA-LSTM baseline by 39.1 % for cell B05 with 50 % training data and by 69.35 % for cell B18 with 70 % training data. These results highlight the effectiveness of the approach in addressing the complexities of battery degradation.

Keywords:

Deep learning, Lithium-ion battery, Long Short-Term Memory(LSTM), State-of-Health(SoH)

1. Introduction

The ongoing global shift towards sustainable energy solutions has driven remarkable growth in the use of lithium-ion(Li-ion) batteries across various sectors. Li-ion batteries have become ubiquitous power sources in applications ranging from portable electronics to electric vehicles.1,2) Ensuring reliable performance and longevity requires an accurate assessment of the battery’s state-of-health(SoH), which quantifies the degradation level and remaining useful life.2,3) SoH estimation is a complex task influenced by factors such as temperature, current profiles, and battery chemistry, and the associated challenges continue to drive research in the battery management field.4) To maximize the performance and longevity of these batteries, efficient SoH estimation strategies have become essential. Both traditional and machine learning-based methods contribute to the advancement of this field.

The literature on battery management encompasses a wide variety of strategies. However, most methods assume identical cell characteristics, often neglecting the underlying complexities of battery aging and its influence on each battery cell’s performance. Various methodologies have been employed for battery SoH estimation, ranging from traditional techniques based on voltage, impedance, and capacity measurements5-7) to more contemporary approaches utilizing machine learning-based methods.3,8-13) Machine learning-based methods can capture non-linear dependencies, temporal trends, and sophisticated interactions between various battery parameters. This adaptability makes them well-suited for addressing the challenges posed by battery aging, uncovering hidden correlations that traditional methods overlook. Each machine learning-based model nevertheless has its own advantages and disadvantages. The Support Vector Machine(SVM) aims to classify the battery’s SoH based on input features and handle complex relationships.8) However, SVMs might struggle to track how battery behavior changes over time due to their reliance on fixed kernel functions, which can result in inaccurate SoH estimation. In the field of ensemble learning, Random Forest models have been applied to battery SoH estimation. Random Forest models aggregate predictions from multiple decision trees to enhance overall accuracy. However, Random Forest struggles to capture the temporal dependencies and complex patterns that define battery aging, which can lead to reduced performance.
An ensemble learning approach that fuses data from various sources, including voltage profiles, temperature, and discharge rates, to estimate battery SoH was presented.9) The ensemble technique enhances prediction accuracy by combining outputs from multiple models. However, the computational complexity of ensemble methods might limit their real-time applicability in resource-constrained systems. K-Nearest Neighbors(KNN) is a simple yet effective technique used for battery SoH estimation. It relies on the similarity between battery behaviors to predict SoH values. However, KNN cannot incorporate long-term trends and dependencies among data points. Consequently, it might exhibit lower performance, especially when dealing with complex battery aging behavior. Information from electrochemical impedance spectroscopy has also been fused with support vector machines to enhance SoH estimation accuracy.10) The combination of different data sources boosts the model’s robustness. However, the method might require extensive feature engineering and can be sensitive to noise in the impedance data. An online SoH estimation approach using incremental machine learning, allowing continuous adaptation to changing battery conditions, was proposed.11) The method offers real-time monitoring and prediction capabilities; however, it faces challenges when dealing with sudden and extreme variations in operating conditions, which could affect its adaptability and reliability. Artificial Neural Networks(ANN) are also often relied upon for battery SoH estimation. ANNs are capable of learning complex relationships between input features, such as voltage profiles and cycling patterns, and the corresponding SoH values. However, while ANNs exhibit some capability in capturing patterns, their capacity to handle long-term dependencies and intricate temporal relationships remains limited.
To address the limitations of existing machine learning-based methods, Long Short-Term Memory(LSTM) networks, a type of recurrent neural network(RNN), have gained prominence in SoH estimation due to their capability to capture temporal dependencies in sequential data. However, standard LSTM architectures may struggle to capture intricate patterns in the data, leading to suboptimal SoH predictions. To overcome these limitations, researchers have proposed various modifications to LSTM architectures, such as incorporating attention and gating mechanisms.13) These efforts have yielded some improvement, but conventional LSTM models still face limitations in accurately estimating SoH, which motivates further architectural modifications.

To bridge this gap, a novel approach that improves SoH estimation accuracy by modifying the architecture of conventional LSTM networks is proposed. The LSTM’s ability to capture temporal dependencies and complex patterns makes it an ideal candidate for modeling the dynamic correlation between cell behaviors, charge-discharge cycles, and aging effects. By training the LSTM on historical battery data, including voltage profiles, cycling patterns, and SoH indicators, the model learns to predict cell behavior under varying conditions. By incorporating SoH indicators as additional inputs to the LSTM, the model gains the ability to make informed decisions that consider the health status of individual cells. By applying a deep architecture modification to the standard LSTM, significant enhancements in accuracy can be made, positioning it as a promising solution for more reliable SoH estimation. In this way, the key limitations identified in the existing literature are addressed.

In this paper, a novel deep architecture modification to LSTM networks for accurate SoH estimation is presented. The proposed architecture includes multiple LSTM layers with increased depth, followed by dense layers to capture complex temporal relationships inherent in Li-ion battery data. Activation functions such as the scaled exponential linear unit(SELU) are employed to mitigate the vanishing gradient problem and enhance learning efficiency. This deep architecture modification enables the network to learn more complex patterns and dependencies, leading to improved SoH estimation accuracy. Furthermore, capacity, life cycle, and SoH data were calculated and incorporated as input parameters to improve the accuracy and reliability of battery health prediction. Through a series of simulations and experiments, the proposed method aims to demonstrate the effectiveness of the approach over other machine learning-based methods and conventional SoH estimation techniques, even with a limited amount of battery data. Moreover, the proposed method seeks to establish a new standard for battery management strategies that holistically considers the short-term performance, long-term health, and functionality of Li-ion batteries in a dynamically evolving energy landscape.

2. Methodology

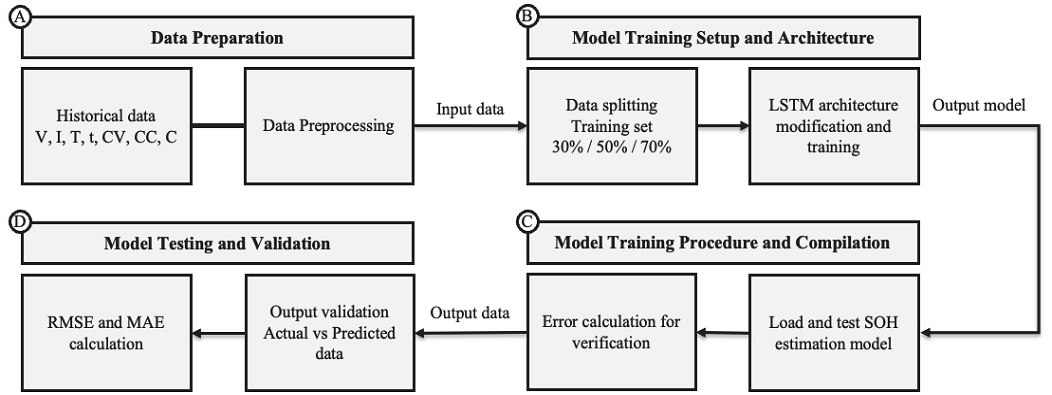

In this section, the novel deep architecture modification to LSTM networks for a more accurate and reliable SoH estimation is introduced. Various discussions will be presented, covering dataset preparation and formulation, model training setup and procedure, as well as deep architecture modification. The overall process flow of the proposed deep LSTM-based SoH estimation model is shown in Fig. 1.

2.1 Data Preparation

In this paper, the model is validated using a Li-ion battery aging dataset provided by the NASA public data repository. Two battery cell datasets, B05 and B18, were utilized, each recording information about the charging, discharging, and impedance of the battery cells. The Li-ion batteries underwent three different operational profiles at room temperature: charge, discharge, and impedance measurement. Charging was conducted in constant current(CC) mode at 1.5 A until the battery voltage reached 4.2 V, then continued in constant voltage(CV) mode until the charge current dropped to 20 mA. Discharging was performed at a constant current(CC) level of 2 A until the battery voltage decreased to 2.7 V. Impedance measurement was conducted through electrochemical impedance spectroscopy with a frequency sweep from 0.1 Hz to 5 kHz. The repeated charge and discharge cycles resulted in accelerated aging of the batteries, while impedance measurements provided insights into the internal battery parameters that change as aging progresses. The experiments were terminated when the batteries reached their end-of-life criterion, a 30 % reduction in rated capacity(from 2 Ah to 1.4 Ah).

During data preprocessing, the capacity data, based on historical data, was used to compute the actual SoH per life cycle of the battery. The actual SoH is defined as:

$$\mathrm{SoH} = \frac{Q_n}{Q_{rated}} \tag{1}$$

where Qrated refers to the initial battery rated capacity provided by the manufacturer, set at 2 Ah. This means that there is a fixed value of 2 Ah for the Li-ion battery cell datasets. In each battery cell dataset, the number of cycles varies as cells were discharged under different battery voltages: 2.7 V, 2.5 V, and 2.2 V. The number of cycles obtained was 168 for battery cell B05 and 132 for B18. On the other hand, Qn represents the battery capacity(Ah) for discharge until the desired discharging profile per battery cell(2.7 V, 2.5 V, or 2.2 V) was reached, based on the time vector for each battery life cycle in seconds. The actual SoH, along with Qn and the number of cycles, were used in the training and testing of the SoH estimation model, as well as in data cleansing and normalization. Missing values and outliers were also carefully handled to avoid underfitting.
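The SoH computation described above can be sketched in a few lines. This is a minimal illustration that assumes SoH is the ratio of the per-cycle discharge capacity Qn to the rated capacity Qrated of 2 Ah; the function names and the example capacities are hypothetical and are not taken from the NASA dataset.

```python
Q_RATED_AH = 2.0      # rated capacity stated in the text
EOL_FRACTION = 0.70   # end of life: 30 % reduction in rated capacity (1.4 Ah)


def compute_soh(capacities_ah, q_rated_ah=Q_RATED_AH):
    """Return the SoH of each cycle as the ratio Q_n / Q_rated."""
    if q_rated_ah <= 0:
        raise ValueError("rated capacity must be positive")
    return [q_n / q_rated_ah for q_n in capacities_ah]


def first_eol_cycle(soh_values, threshold=EOL_FRACTION):
    """Return the 1-based index of the first cycle at or below the threshold, or None."""
    for cycle, soh in enumerate(soh_values, start=1):
        if soh <= threshold:
            return cycle
    return None


# Illustrative capacities only (assumed values, not measurements).
example_capacities = [1.86, 1.80, 1.62, 1.39, 1.35]
example_soh = compute_soh(example_capacities)
```

With these illustrative values, `first_eol_cycle(example_soh)` identifies the fourth cycle as the first one below the end-of-life threshold.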

The dataset was divided into training and testing subsets, with 70 %, 50 %, and 30 % of the data assigned for training and the remaining percentage for testing. This split ensures that the model learns from a substantial portion of the data while reserving a significant portion for evaluation. Furthermore, a function was employed where sequential training and testing data points were generated. In this function, each sequence comprises consecutive SoH values, with the subsequent SoH value serving as the corresponding label. This process involved creating input-output pairs from the historical data, where a sequence of previous SoH values serves as the input and the next SoH value is the output.
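The split and the sequence generation described above can be sketched as follows. The window length is not stated in the text, so `window` is a hypothetical parameter, and truncating the split index with `int` is likewise an assumption.

```python
def chronological_split(series, train_fraction):
    """Split a series into a leading training part and a trailing testing part (no shuffling)."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must lie strictly between 0 and 1")
    cut = int(len(series) * train_fraction)
    return list(series[:cut]), list(series[cut:])


def make_sequences(series, window):
    """Build (input, label) pairs: `window` consecutive values followed by the next value."""
    inputs, labels = [], []
    for start in range(len(series) - window):
        inputs.append(list(series[start:start + window]))
        labels.append(series[start + window])
    return inputs, labels
```

For the 168 cycles of cell B05, a 70 % split under these assumptions gives 117 training cycles and 51 testing cycles before windowing.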

2.2 Model Training Setup and Architecture

In the context of advancing SoH estimation for Li-ion batteries, our approach encompasses a sophisticated model training process designed to harness deep learning capabilities. The architecture configuration and training parameters are meticulously arranged using TensorFlow, a leading machine learning library, to ensure optimal performance. The model architecture follows a sequential structure, comprising multiple LSTM layers, known for their proficiency in capturing sequential dependencies. It begins with a dual-layered LSTM design; the initial layer, featuring 256 hidden units, is tailored to retain sequence outputs, facilitating the capture of complex temporal trends. Subsequently, another LSTM layer with an identical number of units is introduced, operating without sequence outputs to encapsulate the accumulated temporal insights. The architecture’s depth is enhanced by densely connected layers, incorporating 256 units to heighten the capacity for capturing complex inter-feature relationships. This is followed by two additional dense layers with 128 and 1 unit(s) respectively, culminating with a linear activation function, suitable for SoH prediction tasks. The model’s architectural configuration is concisely summarized, providing a comprehensive overview of its layer composition and trainable parameters.

The adopted model architecture and training setup offer several advantages that collectively enhance the accuracy and effectiveness of SoH estimation for Li-ion batteries. Incorporating LSTM layers enables the model to capture complex temporal patterns characteristic of battery degradation, essential for accurate SoH prediction as battery performance evolves over time. The multi-layered structure, featuring deep LSTM and densely connected layers, empowers the model to discern complex relationships among diverse input features, providing deeper insight into the mechanisms driving battery degradation. Utilizing the Adam optimizer with a carefully chosen learning rate expedites convergence to optimal weight values, ensuring efficient training and quicker attainment of accurate predictions. Furthermore, mechanisms like early stopping and model checkpointing contribute to robust training, preventing overfitting and preserving the best-performing model iteration. Lastly, the strategic data partitioning strategy and the decision to maintain sequence integrity without shuffling sustain the model’s alignment with the temporal nature of battery SoH prediction. Collectively, these features underscore the model’s capacity to comprehend complex battery behaviors, paving the way for more reliable battery management systems.

2.3 Model Training Procedure

The primary innovation in this study lies within the LSTM architecture, tailored to capture complex temporal patterns and thereby enhance SoH estimation. The architectural modifications include increased depth, utilization of the SELU activation function, addition of dense layers, and alignment with the output layer. The modified architecture incorporates two LSTM layers, each with 256 units. This increased depth enables the network to comprehend hierarchical features and intricate temporal dependencies within the data. The use of the SELU activation function alleviates the vanishing gradient issue, facilitating stable training across the deep architecture. Additionally, the architecture integrates supplementary dense layers with 256 and 128 units, both employing SELU activation functions. These layers further enhance the model’s capacity to discern complex patterns and relationships intrinsic to SoH data. The output layer adopts a linear activation function, aligning with the continuous nature of the SoH value to be predicted. The modified LSTM architecture is compiled using the Adam optimizer.
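A minimal Keras sketch of the layer stack described above is given here. Several details are assumptions rather than statements from the text: the window length `SEQ_LEN`, a single input feature per time step, the learning rate, the mean-squared-error loss, and the use of SELU inside the LSTM layers (the text could also be read as applying SELU to the dense layers only).

```python
SEQ_LEN = 10     # hypothetical window length; not stated in the text
N_FEATURES = 1   # assumed: one SoH value per time step


def build_deep_lstm(seq_len=SEQ_LEN, n_features=N_FEATURES, learning_rate=1e-3):
    """Build two LSTM layers followed by SELU dense layers and a linear output."""
    # Imported here so the sketch can be read and loaded without TensorFlow installed.
    import tensorflow as tf

    model = tf.keras.Sequential([
        tf.keras.Input(shape=(seq_len, n_features)),
        # First LSTM layer keeps the full output sequence for the next LSTM layer.
        tf.keras.layers.LSTM(256, activation="selu", return_sequences=True),
        # Second LSTM layer returns only its final output.
        tf.keras.layers.LSTM(256, activation="selu"),
        tf.keras.layers.Dense(256, activation="selu"),
        tf.keras.layers.Dense(128, activation="selu"),
        tf.keras.layers.Dense(1, activation="linear"),
    ])
    # The learning rate and the loss are assumptions; the text only names the Adam optimizer.
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="mse",
    )
    return model
```

Calling `build_deep_lstm().summary()` prints the layer composition and the trainable parameter count.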

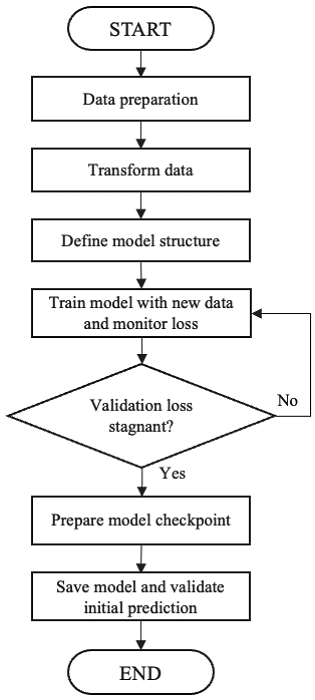

To mitigate overfitting and conserve the best model iteration, the training process integrates early stopping and model checkpoint mechanisms. Early stopping monitors the validation loss and halts training if no improvement is observed over a set number of epochs. The model checkpoint saves the optimal model weights based on the validation loss.
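The interaction between early stopping and checkpointing can be illustrated with a small framework-independent simulation. The patience value and the validation losses below are hypothetical; the text does not state the number of epochs without improvement that triggers the stop.

```python
def run_early_stopping(val_losses, patience):
    """Return (stop_epoch, best_epoch) as 0-based epoch indices.

    Training halts once `patience` consecutive epochs fail to improve on the
    best validation loss. `best_epoch` is the epoch whose weights a checkpoint
    based on validation loss would have kept.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # Improvement: this is where a checkpoint would be written.
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical validation losses for illustration.
losses = [0.50, 0.40, 0.35, 0.36, 0.37, 0.38, 0.20]
stop_epoch, best_epoch = run_early_stopping(losses, patience=3)
```

With a patience of 3, this run stops at epoch index 5 and keeps the weights from epoch index 2; a larger patience would have let training reach the later improvement, which shows the trade-off in choosing this value.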

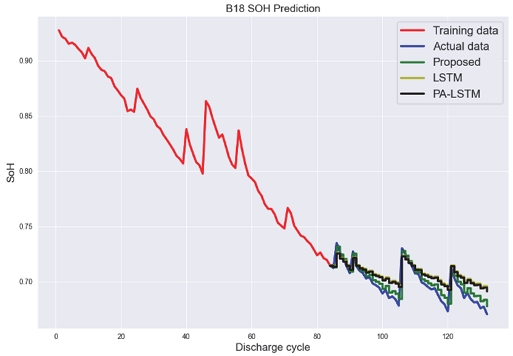

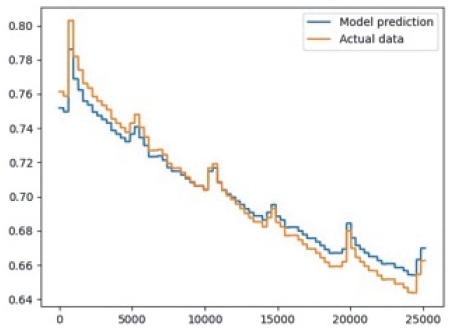

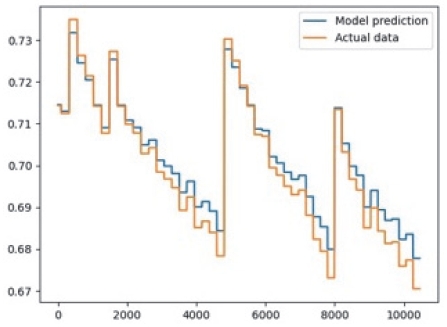

The deep LSTM model is trained on the generated training sequences for 100 epochs with a batch size of 32. Of the training data, 20 % is allocated as a validation set to monitor training progress and detect overfitting tendencies. Early stopping and model checkpoint callbacks are used to ensure model stability and retain the optimal weights, and shuffling is disabled to preserve the sequence order in the dataset. Upon completion of training, the model’s architecture is saved in JSON format, while the learned weights are stored in HDF5 format for subsequent use. To assess the model’s performance, visualizations are generated. Loss curves are plotted to visualize the training and testing progress, and the model’s initial predictions are graphed against actual SoH values for both training and testing datasets, as shown in Fig. 2 and Fig. 3. Each battery cell dataset was trained under three different data separations (30 %, 50 %, and 70 %) to observe how the model learns from different portions of the data. Fig. 4 summarizes the training procedure discussed in this section as a flowchart.
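The training settings listed above map onto a Keras `fit` call roughly as sketched below. The settings in `TRAIN_CONFIG` come from the text; the patience value, the file names, and the helper name `train_and_save` are hypothetical.

```python
TRAIN_CONFIG = {
    "epochs": 100,
    "batch_size": 32,
    "validation_split": 0.2,   # the last 20 % of the training samples
    "shuffle": False,          # keep the temporal order of the sequences
}
PATIENCE = 10  # hypothetical; the text does not give the patience value


def train_and_save(model, x_train, y_train, prefix="deep_lstm"):
    """Train with early stopping and checkpointing, then save architecture and weights."""
    # Imported here so the sketch can be read and loaded without TensorFlow installed.
    import tensorflow as tf

    weights_path = f"{prefix}.weights.h5"   # HDF5 weights file
    callbacks = [
        tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=PATIENCE),
        tf.keras.callbacks.ModelCheckpoint(
            weights_path,
            monitor="val_loss",
            save_best_only=True,
            save_weights_only=True,
        ),
    ]
    history = model.fit(x_train, y_train, callbacks=callbacks, **TRAIN_CONFIG)

    # Architecture as JSON; the best weights are already stored by the checkpoint.
    with open(f"{prefix}.json", "w") as handle:
        handle.write(model.to_json())
    model.load_weights(weights_path)   # restore the best checkpoint before evaluation
    return history
```

The returned `history.history` dictionary holds the per-epoch training and validation losses that can be used to plot the loss curves.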

Fig. 2 Initial SoH model prediction for battery cell B05 for both training and testing datasets vs. the actual SoH data

Fig. 3 Initial SoH model prediction for battery cell B18 for both training and testing datasets vs. the actual SoH data

2.4 Deep LSTM-based SoH Estimation Model

The innovation extends beyond the architecture itself, encompassing a comprehensive data preprocessing pipeline that significantly contributes to the accuracy and reliability of the proposed deep LSTM-based SoH estimation model. The NASA dataset, which provides charge, discharge, and impedance values, is a valuable resource for generating the parameters needed to train the model. Using the dataset information, we generated the capacity, life cycle, and SoH data of each Li-ion battery cell. Incorporating these parameters as input enhances accuracy and reliability. Capacity, life cycle, and SoH are fundamental attributes encapsulating the battery’s health state at different levels of granularity. Capacity reflects the energy storage capability, life cycle denotes the number of charge-discharge cycles, and SoH quantifies the degradation level. By including these parameters, the model gains access to rich contextual information, enabling it to discern degradation patterns and capture the complex relationships between capacity loss, cycle count, and overall battery health.

Moreover, the LSTM architecture’s inherent ability to capture temporal dependencies is complemented by including cycle information. The cycle count serves as a temporal context, allowing the model to learn how degradation evolves over time. This is particularly relevant for batteries where degradation accumulates progressively with each cycle. By incorporating cycle information, the model accounts for the cumulative effect of repeated charge-discharge cycles on SoH. Additionally, the use of cycle data empowers the model to distinguish between different life cycle stages, adapting predictions to specific degradation characteristics of each stage, leading to more precise and tailored SoH estimates.

Furthermore, combining capacity, life cycle, and SoH data allows the model to uncover complex relationships and interactions. Capacity loss might accelerate at certain cycle counts or under specific SoH levels. By analyzing these factors jointly, the model captures non-linear degradation patterns critical for accurate SoH estimation. The model gains the capability to extrapolate from historical data, accurately projecting the trajectory of SoH across extended battery life cycles. The data preprocessing strategy enhances predictive accuracy, bridging the gap between raw data and meaningful degradation insights.

By modifying the LSTM network into a deep architecture, the proposed model captures complex temporal dependencies present in battery degradation data. Multiple LSTM layers promote a deeper understanding of complex interactions over time. The architecture’s ability to process sequential data and learn long-range dependencies uncovers subtleties in degradation patterns that might elude shallower architectures. The choice of the SELU activation function within LSTM layers enhances convergence and alleviates vanishing gradient issues. The integration of dense layers promotes the extraction of higher-level features, contributing to more accurate SoH prediction while keeping the number of training epochs low, leading to enhanced model generalizability.
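For reference, the SELU activation and its derivative can be written out directly. The constants are the standard SELU values; the intuition in the note after the block is a common explanation and not a result from this paper.

```python
import math

SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x):
    """Scaled exponential linear unit."""
    if x > 0:
        return SELU_SCALE * x
    return SELU_SCALE * SELU_ALPHA * (math.exp(x) - 1.0)


def selu_grad(x):
    """Derivative of SELU with respect to its input."""
    if x > 0:
        return SELU_SCALE
    return SELU_SCALE * SELU_ALPHA * math.exp(x)
```

For positive inputs the derivative is a constant slightly above 1, so gradients passing through these units are not shrunk the way they are by a saturating activation; for strongly negative inputs the output levels off near `-SELU_SCALE * SELU_ALPHA`.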

This deep architecture marks a significant departure from conventional shallow models, setting it apart from existing machine learning-based SoH estimation approaches. Extensive experimentation and validation show that the proposed model outperforms these methods in terms of prediction accuracy, especially during extended battery life cycles.

In essence, incorporating capacity, life cycle, and SoH data provides a comprehensive set of features, equipping the model with a holistic understanding of battery degradation. The additional layers allow the model to extract more abstract representations, transforming raw input data into informative features that drive accurate predictions. The deep LSTM architecture enhances the model’s capability to learn hierarchical features from the generated input parameters, enabling it to learn the complex relationships and patterns underlying SoH changes, resulting in high accuracy and enhanced reliability of the SoH estimation model.

3. Results and Discussion

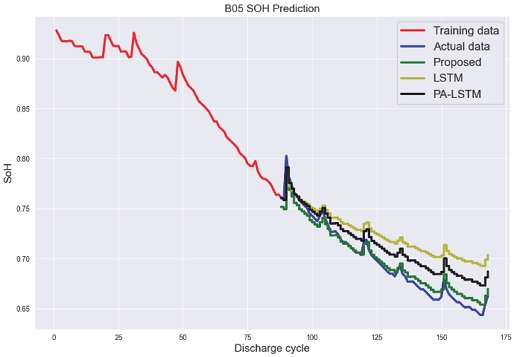

This section presents the results of our evaluation of SoH estimation models using the NASA Li-ion battery aging dataset, focusing on battery cells B05 and B18. To provide a robust comparison, we evaluated the performance of three models: the LSTM,12) the PA-LSTM,13) and our novel deep architecture modification of the LSTM network. The rationale for this selection is grounded in their methodological similarities: all three models are based on LSTM architectures and utilize the NASA public dataset for training and testing. This alignment facilitates a direct and equitable comparison of model performance in SoH estimation. Table 1 lists the complexity of the three models. Each model was built using the Python TensorFlow library, and the table was constructed from the output of the ‘summary’ function. The LSTM12) comprises 4 layers and contains 206,465 parameters. The PA-LSTM13) comprises 3 layers and has 21,122 parameters. In contrast, our model comprises 5 layers and contains a total of 888,321 parameters, reflecting its enhanced complexity and potential for capturing more nuanced patterns in the data. The evaluation was conducted using training data percentages of 30 %, 50 %, and 70 % for battery cells B05 and B18. Table 2 compares the results of the three models using the root-mean-square error(RMSE) metric. The comparison reveals distinct performance trends across the models and training data percentages. As shown in Table 2, the standard LSTM exhibits the highest RMSE values across all training data percentages, indicating suboptimal predictive accuracy. The PA-LSTM demonstrates improved performance compared to the LSTM, achieving notably lower RMSE values. Our proposed architecture attains the lowest RMSE values, consistently outperforming both the LSTM and PA-LSTM across all training data percentages, even with a relatively low percentage of training data. This consistency highlights its efficacy in learning complex temporal dependencies.
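The reported total of 888,321 parameters for the proposed model can be checked by hand from the layer sizes given in Section 2. The sketch below uses the standard parameter formulas for LSTM and dense layers and assumes one input feature per time step; that assumption is not stated in the text, but it reproduces the reported total.

```python
def lstm_params(units, input_dim):
    """Four gates, each with input weights, recurrent weights, and a bias vector."""
    return 4 * (units * (input_dim + units) + units)


def dense_params(units, input_dim):
    """Weight matrix plus bias vector."""
    return units * input_dim + units


def deep_lstm_params(n_features=1):
    """Total parameters of LSTM(256) -> LSTM(256) -> Dense(256) -> Dense(128) -> Dense(1)."""
    total = lstm_params(256, n_features)   # first LSTM layer
    total += lstm_params(256, 256)         # second LSTM layer
    total += dense_params(256, 256)        # first dense layer
    total += dense_params(128, 256)        # second dense layer
    total += dense_params(1, 128)          # linear output layer
    return total
```

The two LSTM layers account for most of the total (264,192 and 525,312 parameters), so the added depth on the recurrent side is the main source of the increased complexity.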

Additionally, the improvement derived from this comparison is shown in Table 3. The proposed model demonstrates significant improvements in predictive accuracy over the existing LSTM models. For B05 data at training data percentages of 30 %, 50 %, and 70 %, the proposed model achieves substantially lower RMSE values of 0.0109, 0.0067, and 0.0053, respectively, in contrast to the LSTM,12) which has RMSE values of 0.1384, 0.030, and 0.014. This translates to RMSE reductions of approximately 92.12 %, 77.67 %, and 62.14 %, respectively. For battery cell B18 data, the proposed model achieved RMSE values of 0.0111, 0.0067, and 0.0038, while the LSTM model had RMSE values of 0.0654, 0.056, and 0.0143, respectively, corresponding to reductions of approximately 83.03 %, 88.04 %, and 73.43 %. The proposed model also outperforms the PA-LSTM.13) With 30 %, 50 %, and 70 % training data, the PA-LSTM yields RMSE values of 0.0119, 0.011, and 0.006 for B05 data, and 0.0208, 0.0152, and 0.0124 for B18 data, respectively. Relative to these values, the proposed model reduces the RMSE by around 8.40 %, 39.1 %, and 11.67 % for B05 data, and by 46.63 %, 55.92 %, and 69.35 % for B18 data, attesting to its robustness across various training data scenarios. Moreover, the performance improvement over the PA-LSTM is more pronounced for B18 than for B05. This is attributed to the larger SoH fluctuations in B18, which make its prediction more challenging. Because the proposed algorithm maintains a consistent RMSE across both cells, the relative enhancement is larger when it is applied to B18 data.
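The percentages quoted above follow from the RMSE values as a relative reduction with respect to the baseline. The sketch below shows the RMSE metric and that calculation; the function names are hypothetical, and the formula is inferred from the reported numbers rather than stated in the text.

```python
import math


def rmse(actual, predicted):
    """Root-mean-square error between two equally long sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def rmse_reduction_percent(baseline_rmse, proposed_rmse):
    """Relative RMSE reduction of the proposed model with respect to a baseline."""
    return (baseline_rmse - proposed_rmse) / baseline_rmse * 100.0


# RMSE values quoted in the text.
b05_vs_lstm_30 = rmse_reduction_percent(0.1384, 0.0109)
b18_vs_palstm_70 = rmse_reduction_percent(0.0124, 0.0038)
```

Rounded to two decimals, these two calls give 92.12 and 69.35, matching the reported improvements for B05 against the LSTM at 30 % training data and for B18 against the PA-LSTM at 70 % training data.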

Fig. 5 and Fig. 6 plot the SoH estimates of the three LSTM-based models for battery cells B05 and B18, respectively. The improvement in prediction accuracy across different training data proportions highlights the effectiveness of the proposed model in capturing complex patterns and temporal dependencies within the battery cell data.

Fig. 5 Comparison of SoH estimation results among the proposed model, LSTM,12) and PA-LSTM13) with NASA battery cell B05

4. Conclusion

In this paper, we introduced a novel SoH estimation method for Li-ion batteries using a modified deep LSTM architecture. By integrating key parameters such as capacity, life cycle, and SoH data, our model significantly improves accuracy in predicting battery health, effectively capturing complex degradation patterns. We validated our model using NASA’s public Li-ion battery dataset, comparing it with existing LSTM models across various training data percentages (30 %, 50 %, and 70 %). The proposed model demonstrates significant performance enhancements over existing LSTM models. For battery cell B05, the RMSE reduction was at least 8.40 % and at most 92.12 %. Similarly, for battery cell B18, the model showed a minimum reduction of 46.63 % and a maximum of 88.04 %. These results underline the model’s potential for advancing battery management systems and predictive maintenance, extending battery lifespan, and ensuring efficient performance across industries. While our proposed algorithm increases accuracy by enhancing the depth of the LSTM layers, this approach does raise concerns about increased complexity. In future research, we intend to address this by applying optimization techniques that streamline the model’s architecture while maintaining performance.

Acknowledgments

This work was supported by the National Research Foundation of Korea(2021R1I1A3056900).

References

- J. Kim, J. Park, S. Kwon, S. Sin, B. Kim and J. Kim, “Remaining Useful Life Prediction of Lithium-ion Batteries through Empirical Model Design of Discrete Wavelet Transform Based on Particle Filter Algorithm,” Transactions of KSAE, Vol.30, No.3, pp.199-206, 2022. https://doi.org/10.7467/KSAE.2022.30.3.199
- C. Song, B. Gu, W. Lim, S. Park and S. Cha, “A Energy Management Strategy for Hybrid Electric Vehicles Using Deep Q-Networks,” Transactions of KSAE, Vol.27, No.11, pp.903-909, 2019. https://doi.org/10.7467/KSAE.2019.27.11.903
- M. A. Kamali, A. C. Caliwag and W. Lim, “Novel SOH Estimation of Lithium-Ion Batteries for Real-time Embedded Applications,” IEEE Embedded Systems Letters, Vol.13, No.4, pp.206-209, 2021. https://doi.org/10.1109/LES.2021.3078443
- H. M. O. Canilang, A. C. Caliwag and W. Lim, “Design, Implementation, and Deployment of Modular Battery Management System for IIoT-Based Applications,” IEEE Access, Vol.10, pp.109008-109028, 2022. https://doi.org/10.1109/ACCESS.2022.3214177
- S. M. Qaisar, “Li-Ion Battery SoH Estimation Based on the Event-driven Sampling of Cell Voltage,” ICCIS Conference Proceedings, pp.1-4, 2020. https://doi.org/10.1109/ICCIS49240.2020.9257629
- J. Sihvo, T. Roinila, T. Messo and D. I. Stroe, “Novel Online Fitting Algorithm for Impedance-based State Estimation of Li-ion Batteries,” 45th Annual Conference of the IEEE Industrial Electronics Society, pp.4531-4536, 2019. https://doi.org/10.1109/IECON.2019.8927338
- J. Tian, R. Xiong and Q. Yu, “Fractional-Order Model-Based Incremental Capacity Analysis for Degradation State Recognition of Lithium-Ion Batteries,” IEEE Transactions on Industrial Electronics, Vol.66, No.2, pp.1576-1584, 2018. https://doi.org/10.1109/TIE.2018.2798606
- X. Feng, C. Weng, X. He, X. Han, L. Lu, D. Ren and M. Ouyang, “Online State-of-Health Estimation for Li-Ion Battery Using Partial Charging Segment Based on Support Vector Machine,” IEEE Transactions on Vehicular Technology, Vol.68, No.9, pp.8583-8592, 2019. https://doi.org/10.1109/TVT.2019.2927120
- K. Yang, L. Zhang, Z. Zhang, H. Yu, W. Wang, M. Ouyang, C. Zhang, Q. Sun, X. Yan, S. Yang and X. Liu, “Battery State of Health Estimate Strategies: From Data Analysis to End-Cloud Collaborative Framework,” Batteries, Vol.9, No.7, Paper No.351, 2023. https://doi.org/10.3390/batteries9070351
- W. Luo, A. U. Syed, J. R. Nicholls and S. Gray, “An SVM-Based Health Classifier for Offline Li-Ion Batteries by Using EIS Technology,” Journal of The Electrochemical Society, Vol.170, No.3, pp.1-10, 2023. https://doi.org/10.1149/1945-7111/acc09f
- C. She, Y. Li, C. Zou, T. Wik, Z. Wang and F. Sun, “Offline and Online Blended Machine Learning for Lithium-Ion Battery Health State Estimation,” IEEE Transactions on Transportation Electrification, Vol.8, No.2, pp.1604-1618, 2022. https://doi.org/10.1109/TTE.2021.3129479
- F. Zhou, P. Hu and X. Yang, “RUL Prognostics Method Based on Real Time Updating of LSTM Parameters,” 2018 Chinese Control and Decision Conference(CCDC), pp.3966-3971, 2018. https://doi.org/10.1109/CCDC.2018.8407812
- J. Qu, F. Liu, Y. Ma and J. Fan, “A Neural-Network-Based Method for RUL Prediction and SOH Monitoring of Lithium-Ion Battery,” IEEE Access, Vol.7, pp.87178-87191, 2019. https://doi.org/10.1109/ACCESS.2019.2925468